Artificial intelligence (AI) and machine learning are at the forefront of a technological revolution that affects virtually every facet of society. From the voice of Siri or Alexa to self-driving cars, machine learning is permeating people’s daily lives and professions, including policing. The emergence of predictive policing as a crime prevention and crime interdiction strategy is illustrative of this trend. Although many police agencies around the world have implemented or plan to adopt predictive policing, this trend has not been without controversy, with critics and civil rights groups raising concerns over the use of big data, along with the challenges surrounding the analytics. These concerns include biases in the underlying data, the perceived “black box” functioning of the model, and the way the technology is applied within an organization. Due to these conflicting perspectives, predictive policing is a source of both optimism and concern for law enforcement and the public at large.

It is in this context that the Vancouver Police Department (VPD) has implemented an unsupervised machine learning algorithm. The lessons learned in the VPD’s journey to apply this new strategy in an ethical and transparent way can help other law enforcement agencies that also want to explore the opportunities offered by advancing technology.

In the Beginning

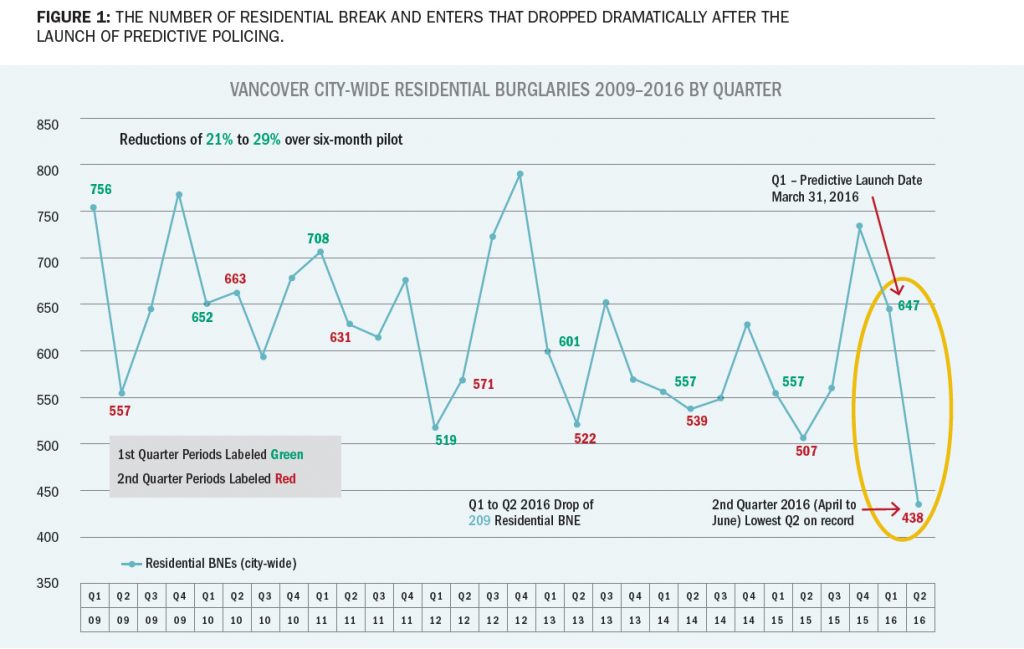

VPD’s predictive policing project started with geospatial engineers and statisticians from academia who worked in partnership with the VPD to develop a machine learning system that could identify property crime patterns. The end result was a forecasting system that could predict a crime location within a 100-meter (300-foot) square at two-hour intervals. The algorithm was designed to continually learn by reviewing data and outcomes and improving its performance through regularly scheduled retraining cycles. The system has proven to be an effective tool in tackling property crime. During the six-month pilot project in which the system was used to direct enforcement activities, burglaries were reduced by more than 20 percent month over month. The pilot started at the end of the first quarter of 2016, which had recorded the highest number of residential burglaries in 20 years. By the second quarter, VPD witnessed the most significant drop in property crime that was ever recorded in its jurisdiction, resulting in the lowest number of residential burglaries in the past 25 years. Due to the significant results achieved, dedicated teams using this approach now form a part of an overall crime reduction strategy.
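To make the forecast resolution concrete, the following is a minimal sketch of how an incident could be assigned to a 100-meter cell and a two-hour window. It is an illustration only, not the VPD's implementation: the function name `to_forecast_bin` and the assumption that coordinates are already projected into meters are hypothetical.

```python
from datetime import datetime
from typing import Tuple

CELL_SIZE_M = 100   # side of one square forecast cell, as described in the article
WINDOW_HOURS = 2    # length of one forecast window, as described in the article


def to_forecast_bin(x_m: float, y_m: float, when: datetime) -> Tuple[int, int, int]:
    """Map a projected coordinate (meters) and a timestamp to (col, row, window).

    Hypothetical helper: assumes x_m and y_m are already in a metric
    projection, so floor division by the cell size gives the grid index.
    """
    col = int(x_m // CELL_SIZE_M)
    row = int(y_m // CELL_SIZE_M)
    window = when.hour // WINDOW_HOURS  # 0..11, twelve windows per day
    return col, row, window


# Example: an incident reported at 13:30 falls into window 6 (12:00 to 14:00).
example_bin = to_forecast_bin(250.0, 1999.9, datetime(2016, 4, 1, 13, 30))
```

Binning in this way is what allows counts to be compared cell by cell and window by window, whatever model is then trained on them.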

The VPD crime forecasting targets only locations for resource deployments and never an individual, which is a key distinction from people-based predictions. The solution benefits from decades of applying location intelligence to address crime in Vancouver. The consistent use of geospatial information has helped to create the necessary infrastructure and supporting data that underpin the system, resulting in accurate business intelligence and crime analytics that provide a better understanding of the underlying causes of crime issues. Tied to the growth in the use of big data has been a parallel growth in geographic information systems (GIS) being used by law enforcement and the resulting features and capacities that this brings to the forefront. It is now commonplace for police services to have a robust GIS infrastructure already in place (e.g., AutoCAD, GeoMedia). Capitalizing on these advancements and internal capacity, the VPD was well positioned to move forward with a transition from traditional hotspot policing to a proactive stance that is intelligence led and informed by evidence-based decision-making.

When exploring the viability of implementing predictive policing within a law enforcement agency, the discussion must first turn to whether the necessary infrastructure is in place and whether the agency has access to the “right data” for a forecasting model to function properly. The term “right data” includes not only data sources that contain the relevant information, but also a sufficient volume of data that has undergone the necessary rigor to ensure consistency in coding, adherence to standardized policies for collection, and best practices in quality control and data integrity.

The application of machine learning-based predictive technology is dependent on two key requirements. First, the police service must have the commensurate technology in place to support the implementation of advanced statistical and data mining tools that can process millions of calculations across the terabytes of data required for a machine learning process to function. Second, if the agency is in possession of the necessary “big data” source, the data must be in a form and quality that facilitates the training of a predictive model. This requires the data to be free of errors over the entire period in which they were collected, and, equally important, the initial coding and collection of the data must have been done in a consistent manner, with no variations in the standards and criteria that define each variable. The adage “garbage in, garbage out” has even greater significance when it comes to the use of machine learning technology and developing predictive outputs.
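The kind of consistency check described here can be sketched in a few lines. The example below is a hedged illustration, not an actual RMS interface: the field names, the offence codes in `ALLOWED_CODES`, and the function `quality_report` are all assumptions made for the sake of the example.

```python
from collections import Counter

# Hypothetical schema: real RMS field names and offence codes will differ.
REQUIRED_FIELDS = ("incident_id", "offence_code", "x_m", "y_m", "reported_at")
ALLOWED_CODES = {"BNE_RES", "BNE_COM", "THEFT_FROM_AUTO"}


def quality_report(records):
    """Count records with missing fields, non-standard codes, or duplicate IDs."""
    report = Counter()
    seen_ids = set()
    for rec in records:
        # A required field that is absent, None, or empty counts as missing.
        if any(rec.get(field) in (None, "") for field in REQUIRED_FIELDS):
            report["missing_field"] += 1
        # Codes outside the agreed list point to inconsistent coding.
        if rec.get("offence_code") not in ALLOWED_CODES:
            report["unknown_code"] += 1
        # The same incident ID appearing twice suggests a duplicate entry.
        rec_id = rec.get("incident_id")
        if rec_id is not None:
            if rec_id in seen_ids:
                report["duplicate_id"] += 1
            seen_ids.add(rec_id)
    return dict(report)


sample = [
    {"incident_id": 1, "offence_code": "BNE_RES", "x_m": 10.0, "y_m": 20.0,
     "reported_at": "2016-04-01T13:30"},
    {"incident_id": 1, "offence_code": "bne-res", "x_m": 10.0, "y_m": 20.0,
     "reported_at": "2016-04-01T13:30"},   # duplicate ID, non-standard code
    {"incident_id": 3, "offence_code": "BNE_COM", "x_m": None, "y_m": 5.0,
     "reported_at": "2016-04-02T09:00"},   # missing coordinate
]
sample_report = quality_report(sample)
```

A report of this kind, run over the full historical range, gives an early indication of whether the data are fit for training before any model is built.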

When considering the implementation of some form of predictive policing, an organization must first reflect on whether the output from the technology will realistically improve expected outcomes and decision-making or whether revisions and enhancements to the current process might be expected to have similar, if not better, results. If the decision is to proceed with a predictive policing initiative, then sufficient time and resources must be allocated to develop and implement data quality standards, quality control processes, and training for those responsible for generating the data. In law enforcement, the root cause of most data quality issues resides with the officer inputting the variables, in the form of police incident reports nested in a records management system (RMS).

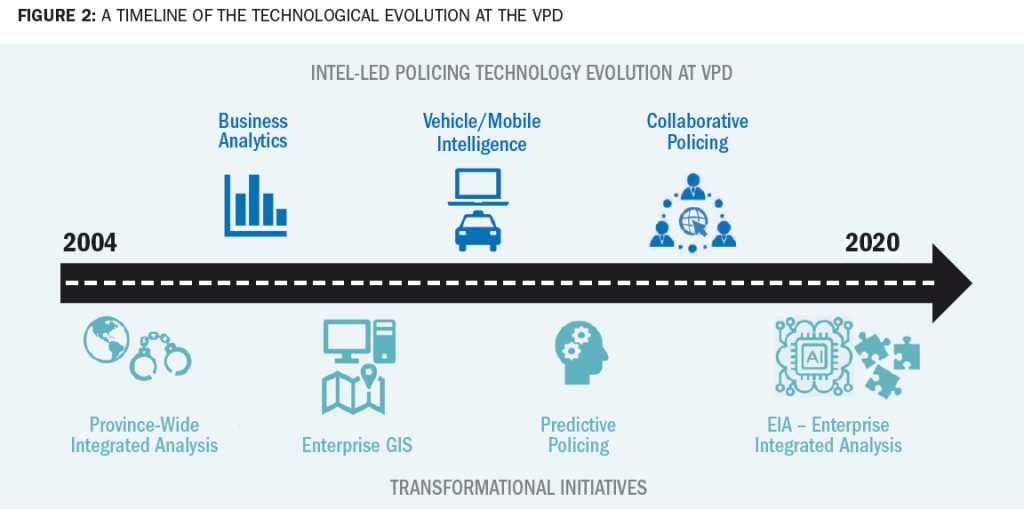

In tackling some of these challenges, the VPD has been continually developing technology and infrastructure, starting in early 2000 with the introduction of a province-wide integrated analytic system. From this initial development, the department then progressed through business analytics; enterprise GIS; predictive policing; and, most recently, the implementation of an enterprise integrated analytic platform that can mine unstructured data and identify patterns buried deep within police systems. At every step in the technological evolution, the VPD has also kept pace with advancements in data quality checks, records clearance review processes, and the application of strict coding and supervisory oversight for every police incident file. This parallel stream helped to ensure both the technology and the underlying records used by the systems met stringent quality control standards. The timeline in Figure 2 illustrates this progression.

Marginalized Communities and Crime Forecasting

Traditional adoption of new technology, such as radio systems, rarely requires a retroactive review of business processes to ensure past practices do not inadvertently affect the newly implemented system. However, predictive policing requires carefully calibrated measurements and large volumes of unbiased data that have been deduplicated and error corrected in order to support machine learning. Poor data quality can be the enemy of predictive policing, where unforeseen systemic issues related to quality control, standardized coding, and inherent bias can affect the success of the implementation. These issues are difficult to control for and often derail organizations looking to increase productivity with machine learning processes. For example, where the data contain location bias and the information is used to generate forecasts, the subsequent deployment of police resources will further reinforce and accentuate this issue. In this situation, the common outcome is for the predictive system to increase the police presence in those areas where the police were originally active and engaged in crime control measures. The data collected continue to corroborate the overrepresentation of resources, and the machine learning reinforces this bias. Ultimately, predictive programs are only as good as the data they are trained on.
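The reinforcement effect described above can be illustrated with a deliberately simplified toy model. It is not a description of any real forecasting system: it assumes two areas with the same underlying crime rate, that incidents are recorded only where officers are present, and that each day's patrol is sent to the area with the most recorded incidents. The function name `simulate_feedback` is hypothetical.

```python
def simulate_feedback(recorded, true_rate, days):
    """Toy model of a self-reinforcing deployment loop.

    Assumptions (all illustrative): every area has the same true_rate,
    new incidents are recorded only in the patrolled area, and the
    "forecast" is simply the area with the highest recorded count.
    """
    recorded = list(recorded)
    for _ in range(days):
        target = recorded.index(max(recorded))  # area the forecast points to
        recorded[target] += true_rate           # only that area gains records
    return recorded


# A small historical imbalance (12 vs. 10 records) grows to 42 vs. 10 after
# 30 days, even though both areas have identical underlying crime.
after_30_days = simulate_feedback([12, 10], true_rate=1, days=30)
```

In this toy setting the gap comes entirely from where officers were sent, which is why the source of the input data matters as much as the model itself.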

For the VPD, great care was taken to put adequate measures in place to keep the data as free of bias as possible. At the outset, the VPD sought to address potential issues, such as the unintended targeting of community members, whereby predictive technology could result in over-policing of marginalized or ethnically diverse neighborhoods. Closely tied to this preventative strategy is the overall ethical application of the technology and ensuring both respect for privacy rights and transparency in the deployment of police resources.

The VPD went to great lengths to consider civil rights and the protection of privacy by not collecting information on individuals, only locations. In this regard, the VPD has also been cognizant of working with marginalized communities. For instance, Vancouver’s Downtown Eastside (DTES) includes some of the most marginalized and vulnerable populations in Canada, with people facing challenges that include poverty, mental health, and substance abuse. To better address the needs of this very diverse community, a community policing approach that focuses on harm reduction and one-on-one engagement is being used instead of predictive policing. The police work with mental health practitioners who are embedded within specialty police teams, working jointly with community nurses, addiction counselors, Coastal Health Authority, and community support groups and nonprofit services. These units provide dedicated resources geared toward providing better service delivery to that client group in the DTES neighborhood.

Given the challenges that the people living in the DTES are experiencing, a strictly enforcement and deterrent approach does not mesh with or complement the other support strategies that are in place within that community. As such, it was decided that dedicated beat team resources and community policing programs were best suited for engaging with the members of the community in the DTES and that the use of predictive policing may in fact be counterproductive for this client group.

In contrast, predictive policing, as it is applied by VPD, is premised on deterrence and proactive interdiction strategies, whereby police officers and community safety officers deploy to areas that have been identified as having a greater probability of experiencing a property crime incident, specifically residential burglaries. Police resources, guided by the system, capitalize on the deterrent effect of a high-visibility police presence.

Auditing Opaque Processes with an Eye on Ethics

Like many machine learning algorithms, the one utilized at the VPD is unsupervised, rewriting itself every two to four weeks; thus, the specifics of what exactly the algorithm calculated or weighed at each specific time interval are often unknown. These limitations have contributed to the criticism that many crime forecasting systems represent a “black box,” whereby it is impossible to determine how an output was arrived at.

The core algorithm adjusts to nuances and patterns that are emerging, then uses them to calculate daily forecasts. The details of what is being factored into the calculations and the weight of each of these factors are subject to change from moment to moment. Because of this limitation in the ability to actively monitor how the algorithm is weighting specific factors, system outputs and subsequent resource outcomes are regularly examined. Specifically, the potential issue of police overrepresentation in an area is monitored. In lieu of the ability to trace the algorithmic process used to arrive at a specific forecast, the VPD instead relies on regularly scheduled audits of model outputs, policing outcomes, and performance benchmarking to determine whether the system is behaving as expected and to provide an early warning of potential issues. For the units sent to forecasted locations outside the marginalized areas, exact locations and durations are recorded. In addition, a detailed template within the RMS is filled out by the officers each time they are deployed to a forecasted location. The electronic RMS template and location tracking document the officers’ actions, enabling regular evaluation of how often, when, and where units are deployed.
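One way an output audit of this kind could be approached is to compare where units were deployed with where the public reported incidents. The sketch below is a hypothetical illustration rather than the VPD's audit procedure: the function `flag_overrepresented`, the cell labels, and the `max_ratio` threshold are all assumptions.

```python
from collections import Counter


def flag_overrepresented(deployments, incidents, max_ratio=2.0):
    """Return cells whose share of deployments far exceeds their share of incidents.

    deployments and incidents are lists of cell identifiers, one entry per
    deployment or reported incident. max_ratio is an illustrative threshold.
    """
    dep = Counter(deployments)
    inc = Counter(incidents)
    total_dep = sum(dep.values())
    total_inc = sum(inc.values())
    flagged = []
    for cell, n_dep in dep.items():
        dep_share = n_dep / total_dep
        inc_share = inc.get(cell, 0) / total_inc if total_inc else 0.0
        # Flag cells patrolled with no reported incidents, or patrolled
        # out of proportion to their share of reported incidents.
        if inc_share == 0 or dep_share / inc_share > max_ratio:
            flagged.append(cell)
    return sorted(flagged)


# Cell "A" receives 60 percent of deployments but only 20 percent of incidents.
deployments = ["A"] * 6 + ["B"] * 3 + ["C"] * 1
incidents = ["A"] * 2 + ["B"] * 4 + ["C"] * 4
flagged_cells = flag_overrepresented(deployments, incidents)
```

A check like this does not explain why the model produced a forecast, but it can surface a drift toward particular areas without needing to open the model itself.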

Data Transparency and the Next Steps

As well as learning its own lessons along the way, VPD also benefited from and adapted its approach based on the lessons learned by other police services that have implemented crime forecasting systems. Many departments have faced legal challenges because they ran afoul of civil rights and civil liberties principles, a significant risk with predictive policing. In several studies, data from patrols, arrests, convictions, and other data tied to the criminal justice system have been shown to have an inherent bias. For example, focusing on potential offenders based on their associations within the community, connections to relatives with a criminal history, and officer perceptions can result in unjustifiably criminalizing people by subjecting them to intense police scrutiny. This could also contribute to a cyclical pattern created by predictive systems, which can further perpetuate the inherent bias that created the initial police scrutiny.

As previously noted, the VPD avoids some of these pitfalls by exclusively focusing on locations and not persons. Taken a step further, VPD intentionally excludes the use of police-generated data and instead processes only citizen-generated property crime incidents. While community-generated data can still contain levels of bias and prejudices, removing police-generated data from the system inputs helps to control any underlying organizational issues, should they exist. While the VPD has a long history of positive community engagement and proactive participation with marginalized members of the community, the added step of removing any perceptions of police bias helps to strengthen public trust in the use of the technology. As the data source is derived from the community, there is a better chance for the outcomes generated to be perceived as less biased and police-centric than if they were generated only from police data.
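In data terms, this exclusion amounts to a filter applied before any training takes place. The following is a minimal sketch under assumed field names (`source`, `category`) and values (`"citizen"`, `"property"`); the actual RMS schema is not described in this article.

```python
def select_training_incidents(records):
    """Keep only citizen-generated property crime incidents.

    Hypothetical schema: each record is a dict with a "source" field
    ("citizen" or "police") and a "category" field.
    """
    return [
        rec for rec in records
        if rec.get("source") == "citizen" and rec.get("category") == "property"
    ]


incoming = [
    {"id": 1, "source": "citizen", "category": "property"},
    {"id": 2, "source": "police", "category": "property"},   # officer-initiated
    {"id": 3, "source": "citizen", "category": "violent"},   # out of scope
]
training_set = select_training_incidents(incoming)
```

Applying the filter at the point of import, rather than inside the model, keeps the exclusion easy to inspect and to explain publicly.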

The VPD has received many inquiries regarding the application of big data and crime forecasting in its operations. As a result, VPD has actively engaged with civil liberties associations, privacy advocates, journalists, and academics to achieve greater transparency. One recent example is the establishment of an open data catalog, where the public can visit the VPD website to download and independently analyze police data spanning the last 16 years. These open data include the time period when crime forecasting was implemented, and they are available to anyone on the Internet.

Given the ethical and civil rights implications in the use of this technology, there is in fact considerable risk and liability exposure for police services. It is hoped that other police agencies interested in machine learning and crime predictions will use the experiences and practices that have been learned through the VPD implementation. However, this work is ongoing and constantly evolving. There is still much to be done to improve on communication, community engagement, and transparency of the systems being used. As such, the development of a police industry code of conduct for this type of project that has undergone an independent and objective review process would help to ensure public confidence and protect against any technical engagements that might bring controversy to an organization. While the Canadian-developed Montreal Declaration for the Responsible Development of Artificial Intelligence imparts a broad ethical framework for industry and public bodies, including guiding principles and human-centric values in the use of technology, it does not provide specifics as to how to achieve this in a real and pragmatic way.¹ For police services that are engaged in the use of machine learning algorithms, a detailed checklist of mandatory practices is needed, ranging from independent ethical review mechanisms to integrity checks for data bias, including a minimum standard for reviewing algorithms for inherent programming bias. Tied to this should also be specifics as to what level of transparency is required concerning the functioning of the algorithms and the parameters for verifying and testing AI prior to usage. These are very real issues within the policing community, and there are currently no standard practices that speak to these necessities. The VPD is currently working with law enforcement partners, government representatives, and industry working groups to help fill this gap.

In summary, many of the issues related to civil rights violations and the over-policing of marginalized and ethnic minority communities were identified early on in the project, and steps were taken to mitigate or avoid these controversial issues. Focusing exclusively on property crime, excluding police-generated reports from the data importation process, and implementing specific solutions to guard against crime forecast hotspots in marginalized segments of Vancouver have all helped to ensure a more ethical and less biased forecasting system.

Note:

¹ Université de Montréal, Montreal Declaration for the Responsible Development of Artificial Intelligence (2017).

Please cite as

Ryan Prox, “Lessons Learned on Implementing Big Data Machine Learning: The Case of Predictive Policing at the Vancouver Police Department,” Police Chief 87, no. 3 (March 2020): 46–51.